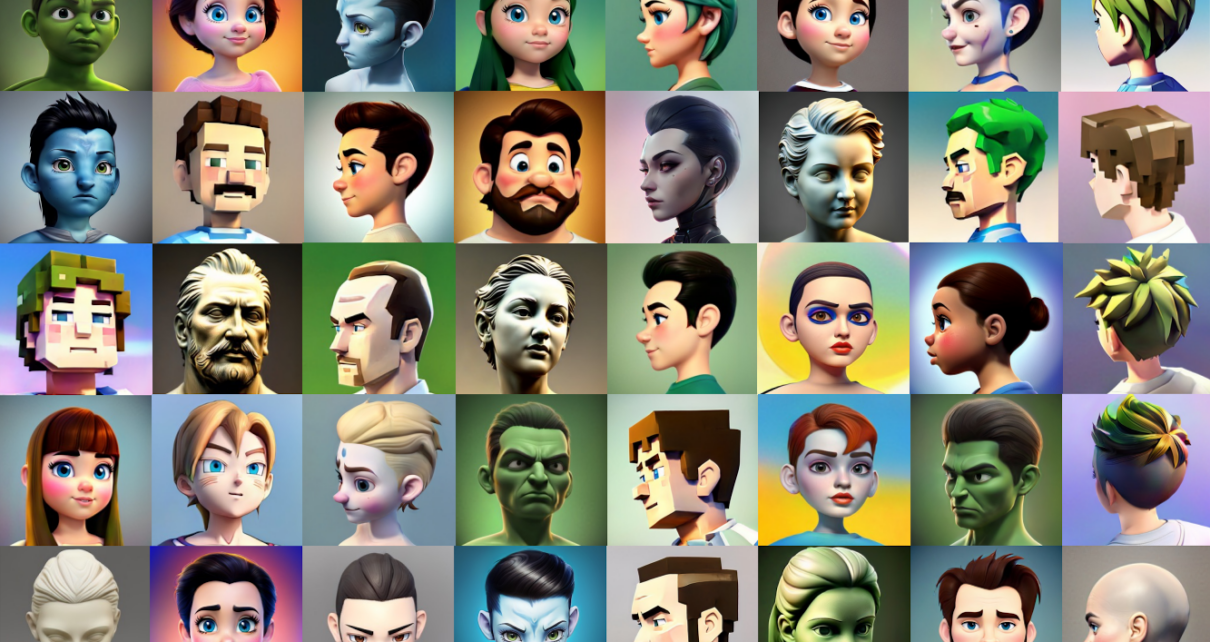

Hello, tech enthusiasts! Emily here, coming to you from the heart of New Jersey, the land of innovation and, of course, mouth-watering bagels. Today, we’re diving headfirst into the fascinating world of 3D avatar generation. Buckle up, because we’re about to explore a groundbreaking research paper that’s causing quite a stir in the AI community: ‘StyleAvatar3D: Leveraging Image-Text Diffusion Models for High-Fidelity 3D Avatar Generation’.

Before we delve into the nitty-gritty of StyleAvatar3D, let’s take a moment to appreciate the magic of 3D avatar generation. Imagine being able to create a digital version of yourself, down to the last detail, all within the confines of your computer. Sounds like something out of a sci-fi movie, right? Well, thanks to the wonders of AI, this is becoming our reality.

However, as with any technological advancement, there are hurdles to overcome. One of the biggest challenges in 3D avatar generation is creating high-quality, detailed avatars that truly capture the essence of the individual they represent. This is where StyleAvatar3D comes into play: its distinctive ingredients, namely poses extracted from existing 3D models, view-specific prompts, and attribute-related prompts, all contribute to generating high-quality, stylized 3D avatars.

StyleAvatar3D is a novel method that’s pushing the boundaries of what’s possible in 3D avatar generation. It’s like the master chef of the AI world, blending together pre-trained image-text diffusion models and a Generative Adversarial Network (GAN)-based 3D generation network to whip up some seriously impressive avatars.

What sets StyleAvatar3D apart is its ability to generate multi-view images of avatars in various styles, all thanks to the comprehensive priors of appearance and geometry offered by image-text diffusion models. It’s like having a digital fashion show, with avatars strutting their stuff in a multitude of styles.

Now, let’s talk about the secret sauce that makes StyleAvatar3D so effective. During data generation, the team behind StyleAvatar3D uses poses extracted from existing 3D models to guide the generation of multi-view images. It’s like having a blueprint to follow: because each image is generated for a known pose and viewpoint, the different views of an avatar stay consistent with one another.
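To make “multi-view” a little more concrete, here’s a minimal sketch of one ingredient: placing virtual cameras evenly around an existing 3D model. In a pipeline like the one described above, the model’s pose would be rendered from each of these cameras and used to condition the image generator. The helper name `orbit_cameras`, the radius, and the pitch are hypothetical illustration values, not settings from the StyleAvatar3D paper.

```python
import math
from dataclasses import dataclass


@dataclass
class CameraPose:
    yaw_deg: float    # rotation around the vertical axis; 0 = camera in front of the avatar
    pitch_deg: float  # elevation above the horizontal plane
    position: tuple   # (x, y, z) camera centre in world space


def orbit_cameras(num_views: int, radius: float = 2.7, pitch_deg: float = 0.0):
    """Place num_views cameras evenly on a circle around the origin.

    Hypothetical helper: radius and pitch defaults are illustrative assumptions,
    not values from the StyleAvatar3D paper.
    """
    poses = []
    for i in range(num_views):
        yaw = 360.0 * i / num_views
        yaw_r, pitch_r = math.radians(yaw), math.radians(pitch_deg)
        # Spherical-to-Cartesian with y as the up axis; yaw 0 puts the camera on +z.
        x = radius * math.cos(pitch_r) * math.sin(yaw_r)
        y = radius * math.sin(pitch_r)
        z = radius * math.cos(pitch_r) * math.cos(yaw_r)
        poses.append(CameraPose(yaw, pitch_deg, (x, y, z)))
    return poses
```

For example, `orbit_cameras(4)` gives cameras at yaw 0, 90, 180, and 270 degrees, all the same distance from the avatar, which covers the front, both sides, and the back.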

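But what happens when the poses and the generated images don’t line up, for example when the model draws a face on what should be the back of the head? That’s where view-specific prompts come in: by stating in the prompt which view is being generated, they steer the diffusion model toward images that match the guiding pose. Together with a coarse-to-fine discriminator used during GAN training, they help address this misalignment, so the generated avatars are as accurate and detailed as possible.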
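Here’s a rough idea of what a view-specific prompt could look like in code: a helper that appends a view phrase to the text prompt based on the camera’s yaw angle. The function name, the 45-degree bin edges, and the exact wording (“front view”, “side view”, “back view”) are assumptions for illustration; the paper’s actual prompt templates may differ.

```python
def view_prompt(base_prompt: str, yaw_deg: float) -> str:
    """Append a coarse view phrase to a text prompt based on camera yaw.

    Hypothetical binning: yaw 0 = front, 180 = back. Bin edges and phrase
    wording are illustrative assumptions, not the paper's templates.
    """
    yaw = yaw_deg % 360.0  # normalise negative or >360 angles into [0, 360)
    if yaw <= 45.0 or yaw >= 315.0:
        view = "front view"
    elif 135.0 <= yaw <= 225.0:
        view = "back view"
    else:
        view = "side view"
    return f"{base_prompt}, {view}"
```

So `view_prompt("a 3D cartoon avatar", 180)` returns `"a 3D cartoon avatar, back view"`, giving the image generator an explicit hint that it should not be drawing a face.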

Welcome back, tech aficionados! Emily here, fresh from my bagel break and ready to delve deeper into the captivating world of StyleAvatar3D. Now, where were we? Ah, yes, attribute-related prompts.

In their quest to increase the diversity of the generated avatars, the team behind StyleAvatar3D didn’t stop at view-specific prompts. They also explored attribute-related prompts, adding another layer of complexity and customization to the avatar generation process. It’s like having a digital wardrobe at your disposal, allowing you to change your avatar’s appearance at the drop of a hat.
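To picture that digital wardrobe, here’s a minimal sketch of attribute-related prompting: sample one phrase from each attribute pool and combine them into a text prompt. The attribute pools, the prompt template, and the function names are all hypothetical; the paper’s actual attribute lists aren’t reproduced here.

```python
import itertools
import random

# Hypothetical attribute pools (illustration only, not from the paper).
ATTRIBUTES = {
    "hair": ["short black hair", "long blonde hair", "curly red hair"],
    "clothing": ["a hoodie", "a suit", "a sweater"],
    "style": ["cartoon style", "anime style", "clay style"],
}


def _format(subject: str, hair: str, clothing: str, style: str) -> str:
    """Fill the (hypothetical) prompt template."""
    return f"{subject} with {hair}, wearing {clothing}, {style}"


def attribute_prompt(subject: str, rng: random.Random) -> str:
    """Build one prompt by sampling a phrase from each attribute pool."""
    hair, clothing, style = (rng.choice(opts) for opts in ATTRIBUTES.values())
    return _format(subject, hair, clothing, style)


def all_attribute_prompts(subject: str) -> list:
    """Enumerate every attribute combination (3 x 3 x 3 = 27 prompts here)."""
    return [_format(subject, *combo) for combo in itertools.product(*ATTRIBUTES.values())]
```

Even with three small pools you get 27 distinct prompts; adding a pool or an option multiplies that count, which is the intuition behind using attributes to increase diversity.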

But the innovation doesn’t stop there. The team also developed a latent diffusion model within the style space of StyleGAN. This model enables StyleAvatar3D to generate avatars from image inputs, opening up new possibilities for 3D avatar creation.
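The post doesn’t go into how this latent diffusion model works internally, so here’s a generic illustration rather than the authors’ implementation: the standard DDPM forward (noising) step, applied to a style vector `w` treated as a plain list of floats. A denoising network would then be trained to predict the added noise (in StyleAvatar3D’s case, presumably conditioned on the input image); that training and the reverse sampling loop aren’t shown. The linear beta schedule and its endpoints are common defaults, assumed here.

```python
import math
import random


def linear_alpha_bars(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02):
    """Return alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule.

    Assumes num_steps > 1. The schedule endpoints are common defaults, not paper values.
    """
    alpha_bars, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def noise_style_vector(w0, t, alpha_bars, rng):
    """Sample w_t ~ q(w_t | w_0) = N(sqrt(a_t) * w_0, (1 - a_t) * I).

    Returns the noisy vector and the noise a denoiser would be trained to predict.
    """
    a = alpha_bars[t]
    eps = [rng.gauss(0.0, 1.0) for _ in w0]
    wt = [math.sqrt(a) * w + math.sqrt(1.0 - a) * e for w, e in zip(w0, eps)]
    return wt, eps
```

Working in the compact style space rather than on full images or 3D volumes keeps the vectors being diffused small, which is the usual motivation for a latent diffusion design.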

StyleAvatar3D combines image-text diffusion models and a GAN-based network to generate high-fidelity 3D avatars. The pipeline has two main components: a pre-trained image-text diffusion model and a GAN-based 3D generator.

The image-text diffusion model is pre-trained on a large dataset of images paired with text descriptions, so it has already learned how visual appearance relates to textual attributes. StyleAvatar3D uses it as a data generator: guided by poses and text prompts, it produces the multi-view avatar images that the 3D network learns from, so the avatars reflect the desired appearance and attributes.

The GAN-based 3D generator is then trained on these generated multi-view images, with the poses from the data-generation step providing viewpoint information. Rather than converting each diffusion output into a 3D model one at a time, the GAN learns a distribution over 3D avatars, so once trained it can produce new avatars that stay consistent across viewpoints.
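The post doesn’t spell out the GAN’s training objective, so as a generic illustration here is the non-saturating logistic GAN loss used by the StyleGAN family, written over raw discriminator scores (logits). Whether StyleAvatar3D uses exactly this form, and what pose conditioning or regularizers it adds on top, is an assumption not covered here.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def discriminator_loss(real_logits, fake_logits):
    """Logistic loss: reward high scores on real images and low scores on generated ones."""
    real_term = sum(softplus(-r) for r in real_logits) / len(real_logits)
    fake_term = sum(softplus(f) for f in fake_logits) / len(fake_logits)
    return real_term + fake_term


def generator_loss(fake_logits):
    """Non-saturating generator loss: -log sigmoid(D(fake)) = softplus(-D(fake))."""
    return sum(softplus(-f) for f in fake_logits) / len(fake_logits)
```

The generator’s loss shrinks as the discriminator scores its renders more like real data, while the discriminator’s loss shrinks as it separates the two; alternating these updates is the adversarial game at the heart of any GAN.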

The authors conducted an extensive series of experiments to evaluate the performance of StyleAvatar3D on various 3D avatar generation tasks. The results demonstrate that StyleAvatar3D outperforms existing state-of-the-art methods in terms of both the visual quality and the diversity of the generated avatars.

Some notable results include:

- High-fidelity avatars: StyleAvatar3D generates avatars with high-quality textures, accurate poses, and realistic facial expressions.

- Attribute manipulation: The model can successfully manipulate attributes such as skin tone, hair color, and clothing style, demonstrating its ability to generate diverse and realistic avatars.

- Multi-view generation: StyleAvatar3D can generate avatars from multiple viewpoints, showcasing its capability to produce consistent and accurate 3D representations.

In conclusion, StyleAvatar3D is a groundbreaking method that pushes the boundaries of what’s possible in 3D avatar generation. By leveraging image-text diffusion models and GAN-based networks, it achieves state-of-the-art results in terms of visual quality and diversity.

As we continue to explore the possibilities of AI-generated content, StyleAvatar3D serves as a testament to the power of innovative research and development in this field. Who knows what future breakthroughs will bring? Stay tuned!

While StyleAvatar3D demonstrates impressive results, there are still many avenues for improvement and exploration. Some potential areas of research include:

- Increasing diversity: Developing methods to generate avatars with even more diverse attributes and styles.

- Improving realism: Enhancing the model’s ability to produce highly realistic 3D avatars that mimic real-world appearances.

- Real-time generation: Investigating ways to accelerate the generation process for real-time applications.

Stay curious, stay hungry (for knowledge and bagels), and remember – the future is here, and it’s 3D!